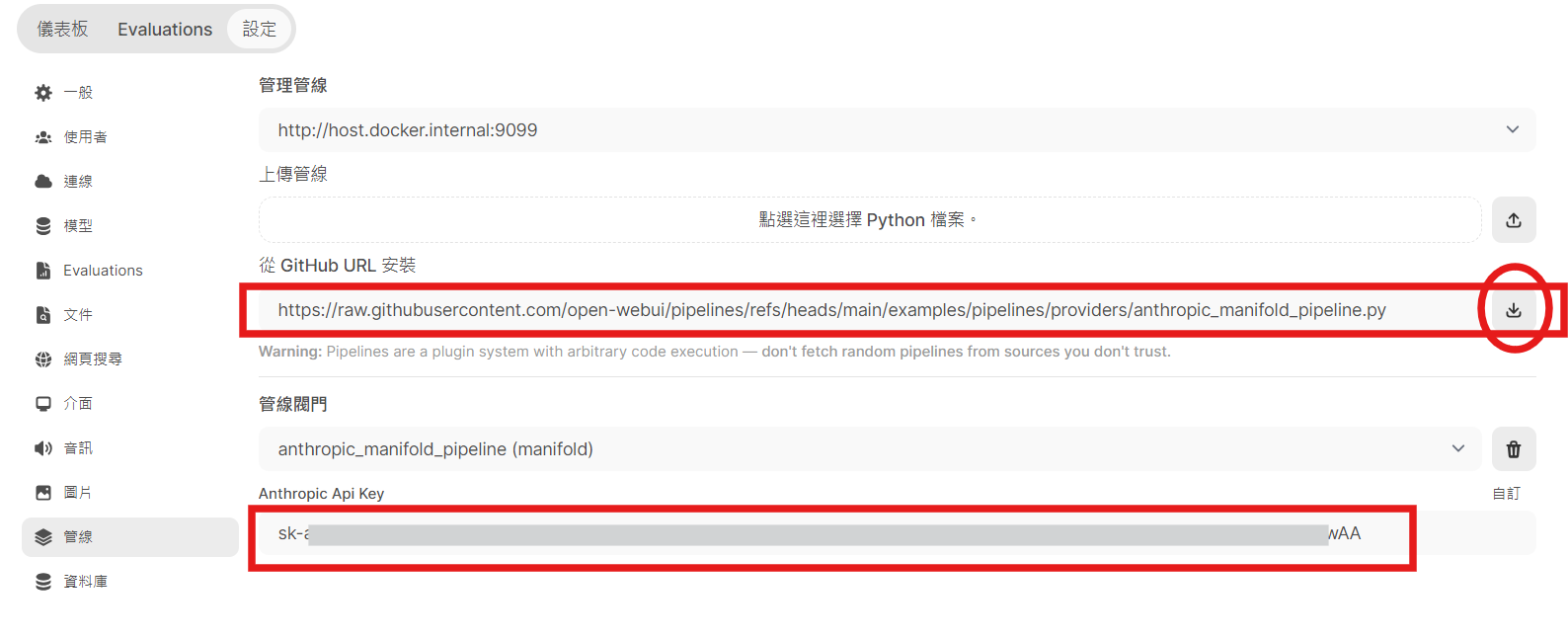

Repo - https://github.com/open-webui/pipelines/tree/main/examples/pipelines

https://raw.githubusercontent.com/open-webui/pipelines/refs/heads/main/examples/pipelines/providers/google_manifold_pipeline.py

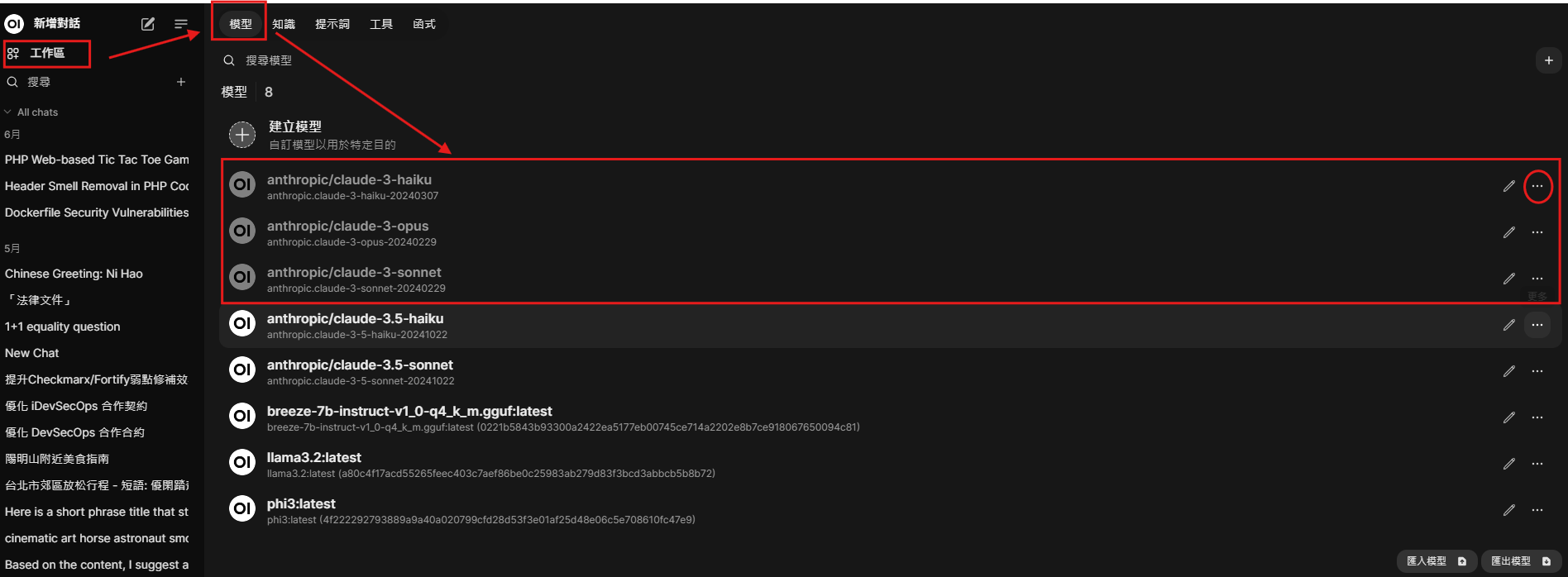

===== Models =====

* ycchen/breeze-7b-instruct-v1_0

* llama3

* codestral

* Download syntax, e.g. for ycchen/breeze-7b-instruct-v1_0: docker exec ollama ollama pull ycchen/breeze-7b-instruct-v1_0
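The same pull syntax applies to each model listed above. As a minimal sketch, assuming the container is named `ollama` as in the example command, the helper below (`pull_cmd` is a hypothetical name) only prints the commands so they can be reviewed before running:

```shell
#!/bin/sh
# Print the pull command for one model.
# Assumption: the Ollama container is named "ollama", as in the example above.
pull_cmd() {
    printf 'docker exec ollama ollama pull %s\n' "$1"
}

# Models taken from the list above.
for model in ycchen/breeze-7b-instruct-v1_0 llama3 codestral; do
    pull_cmd "$model"
done
```

Piping the printed lines to `sh` would execute the pulls one after another.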

===== Evaluating Model Performance in the Installed Environment =====

* Example: phi3

pve-ollama-221:~# docker exec ollama ollama list

NAME ID SIZE MODIFIED

phi3:14b 1e67dff39209 7.9 GB 5 days ago

codestral:latest fcc0019dcee9 12 GB 5 days ago

yabi/breeze-7b-32k-instruct-v1_0_q8_0:latest ccc26fb14a68 8.0 GB 5 days ago

phi3:latest 64c1188f2485 2.4 GB 5 days ago

pve-ollama-221:~# docker exec -it ollama ollama run phi3 --verbose

>>> hello

Hello! How can I assist you today? Whether it's answering a question, providing information, or helping with a task, feel free to ask.

total duration: 3.119956759s

load duration: 905.796µs

prompt eval count: 5 token(s)

prompt eval duration: 101.53ms

prompt eval rate: 49.25 tokens/s

eval count: 32 token(s)

eval duration: 2.889224s

eval rate: 11.08 tokens/s
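The two rate lines in the `--verbose` output are simply token count divided by duration. A small sketch to reproduce them from the figures above (`rate` is a hypothetical helper name; durations must be converted to seconds first, so 101.53ms becomes 0.10153):

```shell
#!/bin/sh
# rate COUNT SECONDS -> tokens per second, rounded to two decimals
rate() {
    awk -v n="$1" -v s="$2" 'BEGIN { printf "%.2f\n", n / s }'
}

rate 32 2.889224   # eval rate: 32 tokens in 2.889224 s
rate 5 0.10153     # prompt eval rate: 5 tokens in 101.53 ms
```

The results agree with the reported 11.08 and 49.25 tokens/s. The eval rate is the figure to compare across models or hosts, since it reflects generation speed rather than prompt processing.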

===== Installing and Testing Ollama Directly in the VM =====

* Installation procedure

curl -fsSL https://ollama.com/install.sh | sh

* ++View installation log|<cli>

iiidevops@pve-ollama:~$ curl -fsSL https://ollama.com/install.sh | sh

>>> Downloading ollama...

######################################################################## 100.0%

>>> Installing ollama to /usr/local/bin...

[sudo] password for iiidevops:

>>> Creating ollama user...

>>> Adding ollama user to render group...

>>> Adding ollama user to video group...

>>> Adding current user to ollama group...

>>> Creating ollama systemd service...

>>> Enabling and starting ollama service...

Created symlink /etc/systemd/system/default.target.wants/ollama.service → /etc/systemd/system/ollama.service.

>>> The Ollama API is now available at 127.0.0.1:11434.

>>> Install complete. Run "ollama" from the command line.

WARNING: No NVIDIA/AMD GPU detected. Ollama will run in CPU-only mode.

</cli>
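After installation, a quick way to confirm the service is answering is to query the API at the address reported in the install log. This is a minimal sketch, assuming the default 127.0.0.1:11434 address and that `curl` is available; `check_ollama` and the `OLLAMA_URL` variable are hypothetical names introduced here:

```shell
#!/bin/sh
# Assumption: default listen address taken from the install log.
OLLAMA_URL="${OLLAMA_URL:-http://127.0.0.1:11434}"

# Print "reachable" if the Ollama version endpoint answers, else "unreachable".
check_ollama() {
    if curl -fsS --max-time 5 "$1/api/version" >/dev/null 2>&1; then
        echo "reachable"
    else
        echo "unreachable"
    fi
}

check_ollama "$OLLAMA_URL"
```

If the result is "unreachable", `systemctl status ollama` is a reasonable next check, since the installer registers Ollama as a systemd service.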